This is part of a series of posts explaining cryptic tech terms in an introductory way.

Disclaimer: this series is not intended to be a main learning source. However, there might be follow up posts with hands-on experiments or deeper technical content for some of these topics.

Motivation

This post extends on a problem from our previous post about rendezvous hashing, Assume you have a system consisting of a cluster of serving nodes that simply takes a request from a client and routes it to the correct back-end node after altering some aspects of that request.

Using rendezvous hashing gives us a node we should talk to (the top of the list Cn) but it also gives us an ordering for fail-over if the node failed. This is very interesting because it didn't only solve the load balancing fail-over issue but it also established whats called distributed k-agreement. Imagine a write request coming to the nodes, this write need to be replicated across nodes in some way so if the node that received the write request failed, the client can fail over to the next. In our scenario, if the client and serving nodes share the same H(Sn, X), nodes can decide the order of replication and create a replication chain that follow the same order as how the client would fallback. Assume we have a discovery service that basically just keeps track of all the nodes around, all members can distributively adapt to changes in the nodes structure without having to communicate with the other nodes.

So my previous post covered what the client should do if a serving node failed and what the serving nodes should do if one of them failed. Yet, without a proper way to know serving node failed as soon as it failed, we can arguably imagine the cluster shrinking back into a single node taking all client traffic.

Think of it this way: All clients will have some ordering of N nodes, N-1 failed and a single working node.

It seems we need a way for nodes to learn about other nodes state and event and act accordingly. But how can we do that?

You know the drill. Take a moment before scrolling down.

Use all the health checks!

Well, yes and no. Health checking is a very nice feature that allows some node ( supervisor node?) to probe other nodes through network link to make sure those nodes are /healthy/ whatever that means. It can be as simple as pinging a port and see if it responds, or calling an RPC endpoint that does health diagnostics and return results of the node health. sometimes in critical systems, both types of health checks can be mixed, you can have a more frequent but lightweight health check and a less frequent but more involved diagnostics check. It's usually used in load balancers to decide if a node is healthy enough to send traffic to. What's different in our case is that we don't have a supervisor node. All nodes are both normal serving nodes but they are collectively responsible for the overall availability of the system. Additionally, like any distributed system, nodes can lose communication through network partitions (think of network partitions as your phone temporary disconnecting when passing through a tunnel, you know once your out of the tunnel things will get better but for the time being, you are cut off)

So maybe health checks are not the best way to go. What else can we do here?

You know the drill. Take a moment before scrolling down.

Use all the heart beats!

Let's try to analyse this idea. All the nodes know about all the other nodes, every N units of time, the node calls all the nodes it knows about and say "hey I'm fine", to which the nodes respond "Oh, good to know you are fine. I'll make sure to remember that for the next N units of time"

How can this friendly banter ever break systems?

You know the drill. Take a moment before scrolling down.

This can be /okay/ on 3-4 nodes. But consider the amount of CPU bounded work nodes need to do just for health checks, even worse consider the amount of bandwidth these health checks will imply on the network. For N nodes, there will be N² messages floating around just for health checks. Furthermore, because CPUs and network links between these nodes are at different loads, this will cause time deviation in the tickers between the nodes and what you'll end up seeing is a constant surge of health checks coming in at all times. There is more technically involved discussions on why many engineers believe that heart beats are rarely a good choice for health checks but that's a topic for another time.

So, what do we do then?

Gossip protocol is a standard protocol for leaderless distributed systems. Many distributed systems rely heavily on Gossip protocol for failure detection and message broadcasting. It follows a very simple model. Imagine you are in your cubicle in an office and you just heard some bad news about a colleague K who got fired (unhealthy node). You, being an active person in the office gossip culture, want to spread that news but immediately realize it's very inefficient going to every single person telling them what you know (heart beats) and it's also inefficient to wait for people to come to your desk (health checks) so you decide to let the folks around you about the news. You rely on the social contract that those nodes are equally active in the office gossip culture and will spread this message across the office like an infection. now everyone knows that K got fired and they won't share data with them (replication) and won't ask them to deliver on their tasks (serving traffic). Well this is a fun analogy but it doesn't cover all of the core concepts of gossip so let's dive in:

Continuous communication

Gossip relies on the idea that communication is continuous and periodic. You don't just talk to Lisa when someone is getting fired, you talk periodically about everything that's happening in the office so that Lisa knows you are okay. If Lisa doesn't hear from you for a while and no one else is mentioning they saw you in the office, Lisa will assume you are on leave or might've gotten fired yourself.

Each node maintains a state of propagated news. The state can include, list of nodes I heard from in the last N time units (heard from means either they talked to me directly, or someone who talked to me have them in their tracked list) as well as some diagnostic data that we won't cover here.

Neighboring v. Random

One of the decisions engineers make when implementing gossip is how to pick the nodes for next step of gossip. This can be either a random peer selection or a more deterministic neighboring peer selection. Imagine you have some gossip you want to share, you have two options: either share with neighboring cubicles, those are folks you know for quite sometime and you probably have a closer bond. You can rely on their availability more than others (less probability of a network partition) and it makes the gossip spread more predictably (if all nodes picked the two most neighboring nodes, you can simulate a gossip graph deterministically)

The other option is to select nodes randomly from all the nodes in the cluster. One of the arguments for that approach is that it increases the chance of a faster spread across different network links. Imagine you have multiple availability zones, if you go with neighboring peer selection, gossip will only hop to availability zone B after everyone in availability zone A learned the news. That's not optimal for disaster recovery. even worse, imagine the delay between the gossip originating in availability zone A to be picked up by nodes in availability zone C.

I've seen implementations of a hybrid approach where a middleman determines whether this piece of news should be propagated randomly or to neighboring nodes based on the news content. Of course, this adds complexity to an already complex protocol as well as a round trip latency. One approach is to only do the round trip when in doubt. i.e: if all good, use neighboring, if there is a disaster, call the service to do a more informed peer selection.

Networking Protocol

While usually this protocol is implemented in higher communication stacks, due to the nature of drop resilient communication and low message overhead, sometimes they are implemented in more efficient communication protocols i.e multicast for a much lower overhead on the network and of course less guarantees on delivery.

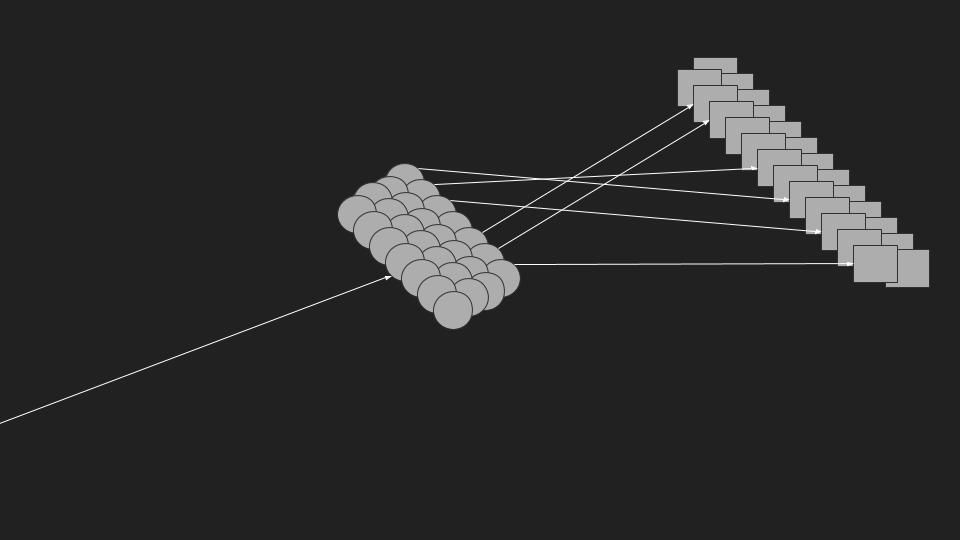

Because every node only calls a subset k of the nodes with gossip (usually 2). The gossip will propagate through the cluster in Log₂N time.

Disadvantages of using Gossip Protocols

- Standard implementations of Gossip protocols rely heavily on the idea that nodes are not malicious. If you opt in for a standard implementation, this means one of the nodes clusters can abuse the system if it was breached by hackers. Security-aware Gossip protocol implementations trade off simplicity and overall latency for a more secure approach.

- Standard implementations of Gossip protocols have weak ordering guarantees, which means, due to latency and uneven load distribution among other things, your system can be prune to having two (or more) views of the worlds simultaneously. This is known as "split brain" problem. As long as implementation have guarantees for eventual consistency and the system is designed to tolerate eventual consistency this should be fine but it adds multiple considerations when extending the system.

Who uses Gossip?

Gossip is being used by a large number of distributed systems. Cassandra, Consul, CockroachDB, DynamoDB among the most well know. Also most blockchain-based projects rely on gossip protocols to broadcast new gossip to participating nodes in the peer network.

Further readings

- SWIM

- Gossip seed nodes

- Gossip Topology-aware peer selection

- Modeling gossip fan out configuration

- Pull-based Gossip

- HashiCorp memberlist